As I wrote in my other article, I'm dealing with a strange problem: when I set a hard IOPS limit on one VM, all VMs on the same datastore experience high storage latency.

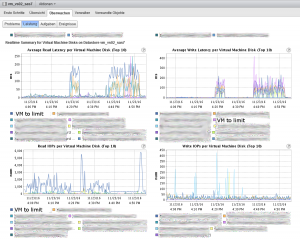

The graphs show what this looks like.

(Sorry, I had to anonymize the VM names.)

The VM I want to limit is marked as “VM to limit” in the graphs.

- In normal operation, this one VM requests up to 3000 IOPS, which is too much for us. Therefore we want to limit it.

- At 4:19 pm I limited the IOPS of this one VM to 500.

- Immediately afterwards, all VMs on the same datastore experienced high latency.

- At 4:24 pm I removed the IOPS limit.

- Immediately, this one VM went up to 5500 IOPS and the latency of all VMs on the datastore dropped.

I opened a case about this and worked with VMware support on a solution. So far the problem isn't solved, but at least I know why it happens. After I sent a lot of log files to VMware support and let them access my environment to see the problem for themselves, they accepted it as a bug and passed the data on to engineering. A few weeks later I got the following answer:

“When limiting the IOPS of a VM which resides on an NFS datastore, the I/O scheduler of the ESXi host is told to add artificial latency to that VM. Due to a programming error, the world ID of the VM which has to be slowed down is not passed to the I/O scheduler. Therefore the scheduler assumes a VM world ID of 0, which means it slows down all VMs on that datastore. This error only occurs on NFS storage. On block storage datastores, IOPS limits work as expected.”
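To make the explanation from support easier to picture, here is a toy model in Python. It is only a sketch of the described behaviour, not ESXi code: the names `Throttle`, `build_throttle` and `added_latency`, the world IDs and the 20 ms delay are all made up for illustration, and the only thing taken from the answer above is that a world ID of 0 ends up affecting every VM on the datastore.

```python
from dataclasses import dataclass

# Assumption taken from the support answer: world ID 0 is treated as
# "every VM on the datastore". Everything else here is hypothetical.
ALL_WORLDS = 0


@dataclass
class Throttle:
    world_id: int    # which VM (world) should be slowed down
    delay_ms: float  # artificial latency to add per I/O


def build_throttle(vm_world_id, delay_ms, pass_world_id=True):
    """Build the throttle request handed to the (hypothetical) scheduler.

    With pass_world_id=False the world ID is dropped, so the request
    carries 0 instead, which mimics the bug described for NFS.
    """
    world_id = vm_world_id if pass_world_id else ALL_WORLDS
    return Throttle(world_id=world_id, delay_ms=delay_ms)


def added_latency(throttle, worlds):
    """Return the artificial delay each world on the datastore receives."""
    return {
        w: throttle.delay_ms if throttle.world_id in (ALL_WORLDS, w) else 0.0
        for w in worlds
    }


worlds = [1001, 1002, 1003]  # 1001 plays the role of "VM to limit"

buggy = added_latency(build_throttle(1001, 20.0, pass_world_id=False), worlds)
fixed = added_latency(build_throttle(1001, 20.0), worlds)

print("buggy:", buggy)  # every VM gets the delay
print("fixed:", fixed)  # only the limited VM gets the delay
```

In the buggy case the delay is applied to all three worlds, which matches what the graphs show: limiting one VM raises the latency for everything on the datastore.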

VMware support then did something that really astonished me: they created, just for me, a developer build of an ESXi installation ISO containing the quick fix. With this developer build I was able to test whether the fix works as intended. We found out that the development department did a good job and the fix worked. It will be included in the next minor version upgrade of the 6.0 branch of ESXi (hopefully Q3/2017).

A strange thing I learned while working on this case is that VMware development (and everyone else) seems to concentrate on block storage rather than file storage. Whenever I asked #vexperts about this problem, I always got the answer that IOPS limits in VMware worked for them. So I assume they are all using block storage and not file storage (NFS) as their storage backend.

Maybe it's time to switch back to iSCSI…